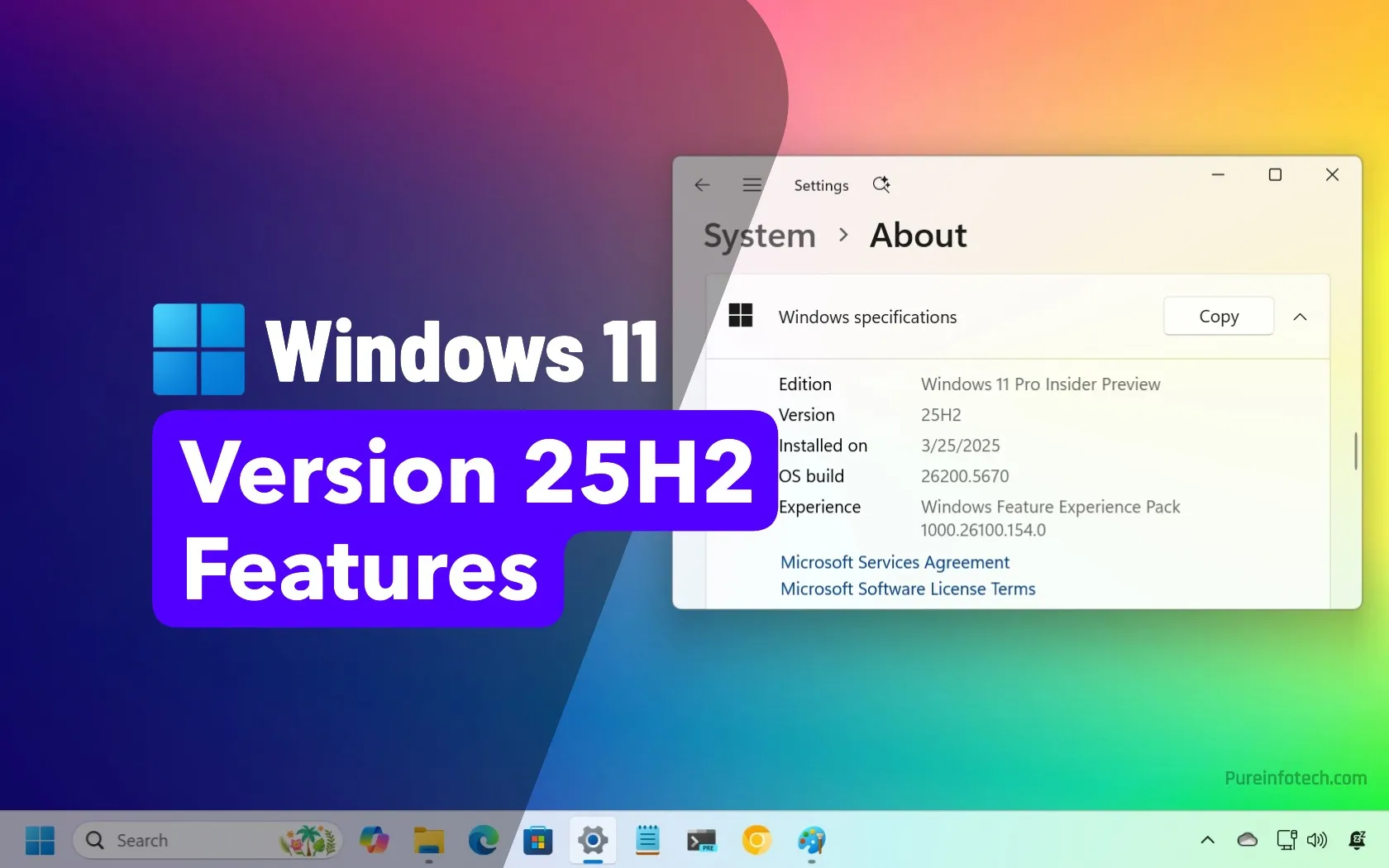

I’ve been skeptical about AI features on phones, but Google’s Gemini might change my mind

Few things trigger my skepticism reflex faster than when a company starts talking about how it's integrating AI into its products. Since artificial intelligence has become the flavor of the moment, a lot of AI announcements and demos seem to have the whiff of "oh yeah, us too" about them, whether those announcements or demos have any actual value or not.

This has become a bit of an occupational hazard for those of us who write about phones for a living, since it seems that you cannot launch a handset these days without rattling off a lengthy list of AI capabilities, too many of which are glorified party tricks that deliver little lasting value to anyone actually using the phone.

So when last week's Made by Google event opened with a discussion of the company's Gemini AI model and what it means for mobile devices, I could feel my brain actively organizing a sit-down strike. "Here we go again," I found myself thinking, as I braced myself for a bunch of features that looked good on a demo stage but served very little practical purpose.

Much to my surprise, by the time the event wrapped up and I had a chance to see additional demos up close, I came away pretty impressed by what Google's doing with Gemini and what it means for devices like the Pixel 9 models that go on sale later this week. While I still have my doubts about the overall promise of AI on mobile devices, I'd have to be pretty hard-headed not to concede that many of Gemini's features will help you get a lot more done with your smartphone in ways that would have seemed unimaginable not too long ago.

Going multimodal

Gemini is multimodal, even when it’s on a mobile device. And that means it’s capable of recognizing more than just text prompts — it can look at images, code and video, pulling information from those sources, too. And that helps the Gemini-powered assistant on Pixel devices to do some pretty impressive things.

For instance, in one demo, a woman was able to use a handwritten reminder about the Pixel 9's August 22 ship date to have Gemini create a task reminding her to preorder the phone, while also adding that date to her calendar. Yes, you could do those things all by yourself without any intervention on a digital assistant's part. But that would mean dropping whatever you're doing to launch at least two apps so that you could manually enter your reminder and calendar event. Gemini takes care of that without interrupting your flow.

Another demo from the Made by Google event itself tasked Gemini with watching a YouTube video about Korean eateries and then compiling a list of the individual food items in a notes app. As someone who watches a lot of cooking videos, I appreciate how this can save me time: instead of having to jot down ingredients or prep steps myself, which inevitably means scrolling back through the video to make sure I haven't missed anything, I can put Gemini to work. That frees me up to concentrate on the video's content, knowing a digestible record will be waiting for me when I'm done watching.

AI that’s helpful

These examples resonate because they show how AI can save you time by taking tedious tasks off your hands. Device makers go wrong when they carry that time-saving ethos into tasks that call for creativity. Google, with its much-maligned Olympics ad featuring AI-generated fan mail, is no stranger to this misstep.

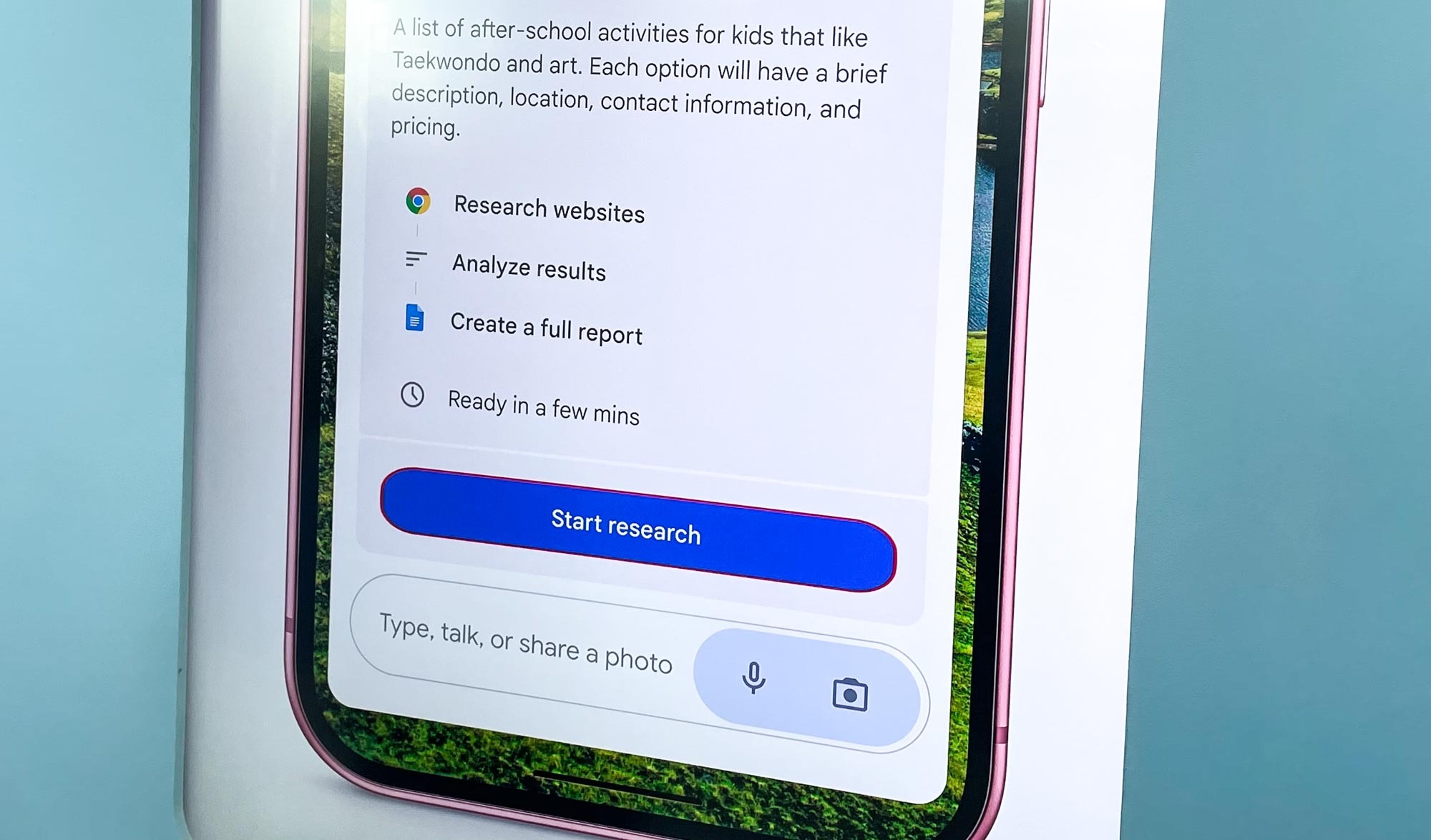

That swing-and-a-miss aside, it seems like Google really does understand that Gemini will work best when it’s removing roadblocks to helping you get things done. Take the Gemini with Research functions that Google plans to add to Gemini Advanced in the coming months. Using that research functionality, you’ll be able to task Gemini with looking up specific information online — in the demo I saw, Gemini was put to work researching after-school programs for kids who are interested in martial arts.

Before it gets started, Gemini with Research presents an action plan listing what it intends to look up, which you can review and tweak. It then explores the far corners of the web, drilling down to find related information that it compiles into a report delivered to you through Google Docs. The report even includes links to the online sources Gemini found.

It's a pretty appealing approach to online research, particularly since Gemini can likely gather information faster and more thoroughly than I could. But I'm not totally sidelined by the AI: I can fine-tune the research plan by suggesting what to look for, and I can track down the sourced information to make sure that it fits my criteria. Rather than lazily handing over an entire task to Gemini, I'm letting it take care of the grunt work so that I can ultimately make informed decisions.

Someone you can talk to

I also appreciate the Gemini Live feature shown off at Made by Google and rolling out to Gemini Advanced subscribers as we speak. This is the voice component of Google’s chatbot, and it’s designed to be natural-sounding and conversational — music to the ears of those of us who’ve struggled to communicate with voice assistants like Siri because we used the wrong word or didn’t make our context clear enough.

A potentially helpful aspect of Gemini Live is the ability to use the voice assistant as a brainstorming tool. You can ask Gemini a question — say, about things to do this weekend — and the assistant will start rattling off ideas. Because you can interrupt at any time, you’re able to drill down into an idea that sounds promising. And Gemini understands the context of the conversation, so questions can be informal and off-the-cuff.

Google Gemini Live… the voices sound super natural. Here's a few of the options #MadeByGoogle pic.twitter.com/YJWGEuqSAX (August 13, 2024)

I’d have to try Gemini Live myself to really be sold on it, but the conversational aspect is appealing. Much like the ability to fine-tune the parameters in Gemini with Research, this strikes me as a more collaborative use of AI, where the chatbot is responding to your input and refining the information presented to your specifications.

Gemini outlook

I still have some lingering doubts about AI on phones in general and Gemini in particular. Features like image generation just don’t interest me, and there’s always the risk that an especially lazy or unethical user might take the output from something like Gemini with Research and pass it off as their own. And of course, we all need to be vigilant that promised privacy safeguards remain in place.

But if my overall complaint about AI features on phones is that too many of them feel like glorified party tricks, that’s certainly not the case with what I saw Gemini do this week. Google is clearly building a tool that helps you do even more with your phone. And that’s reason enough to be excited about the possibilities.